New Tool Helps Districts Evaluate Ed-Tech Companies’ Claims of ‘Evidence’

Ed-tech companies, take notice.

A new tool aims to help K-12 districts clearly and objectively evaluate the product-effectiveness studies and research that commercial providers often cite as evidence of their digital products’ value.

The tool was developed by the nonprofit Digital Promise in cooperation with researchers from Johns Hopkins University’s Center for Research and Reform in Education.

The tool uses a relatively simple format: it asks school officials involved in purchasing decisions to consider 12 multiple-choice questions on the relevance, rigor, and design of product-evaluation studies, whether they’re conducted independently, paid for and overseen by companies themselves, or something in between.

If district officials are armed with that information, they’re likely to make more informed decisions about whether they need to stage pilots of those products, Digital Promise contends. It could be that they don’t need a pilot, and they can instead focus on other questions surrounding the purchase of the technology, such as cost, and how it meshes with their current IT systems.

Digital Promise, a nonprofit that focuses on improving schools through technology and research, has taken a strong interest in recent years in how K-12 systems buy ed-tech, the processes they follow in staging pilots — and why there seem to be so many derailments along the way.

The organization’s past research on procurement helped shape the decision to create the evaluation tool, Aubrey Francisco, Digital Promise’s research director, explained in an interview.

Districts tend to be skeptical of companies’ claims that their products are effective when vendors base their claims on research they’ve overseen themselves.

Companies, in turn, often say that school officials lack the expertise to judge the quality of the research they conduct on products, Francisco pointed out. The tool is meant to help “the supply side and the demand side” by providing clarity on how product research of ed-tech can be judged, she said.

“By strengthening both sides of the market, we can do a better job getting high-quality products into the hands of the students and teachers who need them,” Digital Promise said in an online post announcing the tool.

The audience for the evaluation tool could be any K-12 officials involved in making decisions about ed-tech purchasing – not just superintendents and tech directors, for instance, but also curriculum directors, tech coaches, and teams of teachers.

A Test of Relevance and Rigor

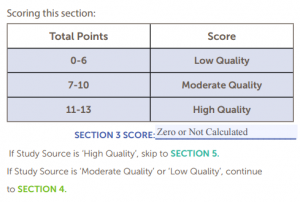

The tool puts a series of questions, available on Digital Promise’s website, to district officials. It’s divided into four sections, focused on product information, study relevancy, the study’s source, and study design.

Rather than simply taking K-12 officials through a string of questions and spitting out a cumulative score, the tool walks users through the questions section by section, so that they make a careful judgment about a study of an ed-tech product’s effectiveness, and about whether staging a pilot might help them.

For instance, the “Study Design” section asks users, “Was the data-collection tool appropriate for what the researchers wanted to measure?”

It then gives examples of possible tools, asks how many participants took part in a study, and whether there was a detailed explanation of study design, among other questions.

At the end of that section, if the study design is scored as high or moderate quality, district officials are advised that the results are relevant and useful.

And if it’s of low quality?

“Do not proceed,” it advises. “The study is not a reliable source of evidence. Instead, seek out other sources of evidence or consider running a pilot.”

Districts tend to be particularly skeptical of research conducted by companies on their own products. The tool doesn’t suggest that districts should automatically dismiss those studies, Francisco said; it instead tries to set clear standards for how K-12 officials should judge their design and relevance.

District officials – and ed-tech developers – can give the tool a trial run, and judge its usefulness for themselves.
