Ed. Department Aims to Accelerate Ed-Tech Evaluations

The Department of Education is asking for bids to design a prototype system to quickly evaluate ed-tech in K-12 schools, in hopes of making it easier for educators to figure out what works in products they purchase with federal funding.

“What does ‘what works’ mean?” asked Katrina Stevens, a senior advisor in the department’s office of educational technology, last week at a national meeting of educator-ed-tech partnerships in Chicago, as she discussed the general need for research in this area. “And who gets to decide what works?”

The research and implementation team chosen through the department’s request for proposals will tackle these and other central questions in its design of “rapid-cycle” evaluations—one- to three-month tests of ed-tech software. Beyond getting feedback on technology purchased with funds available under the Elementary and Secondary Education Act, the department wants to help schools and parents “make evidence-based decisions when choosing which apps to use with their students,” according to its announcement.

School leaders look for research-based evidence about what works, but they are often skeptical of studies funded by the companies that are trying to sell the products.

In seeking a standard, low-cost, quick-turnaround process, the department is also trying to “understand how to improve outcomes of ESEA programs,” the announcement says, referring specifically to products purchased with funding from Titles I, II, and III and the Individuals with Disabilities Education Act.

The solicitation for a contract is, itself, on a rapid cycle. Released Aug. 12, the RFP carries a due date of Sept. 3. It does not specify the size of the budget for the effort.

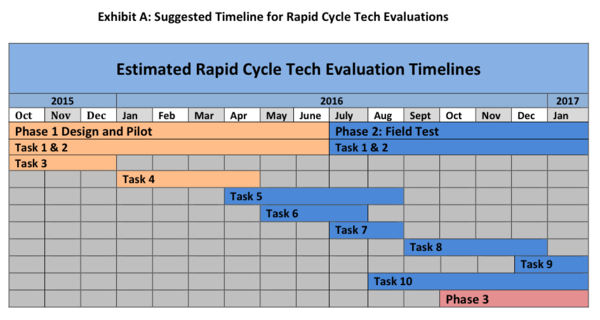

The team chosen will be required to:

- Establish a technical working group consisting of researchers, K-12 practitioners, and private sector ed-tech experts

- Develop a research design for conducting rapid-cycle tech evaluations

- Use this research design to conduct pilot evaluations in three to six schools or districts

- Prepare reports and disseminate findings after the evaluations are completed

- Refine the research design based on findings from the first phase of the project

- Select sites and apps for eight to 12 field tests using “a fair and transparent process”

- Field test selected apps

- Report findings from this phase

- Create an interactive guide and implementation support tool for conducting rapid-cycle tech evaluations

Ultimately, the department is interested in moving the field forward from a “kicking the tires” approach to piloting products, to taking educational apps for “a real test drive,” Stevens said.

The projected 16-month timeline for the activities associated with this contract will run from October 2015 through January 2017.

The full RFP is posted here.
